In this episode of Status Check with Spivey, two experienced and respected law school Deans—Craig Boise, Dean of Syracuse University College of Law, and Daniel Rodriguez, former Dean of Northwestern University Pritzker School of Law—discuss the history, the present, and the future of law school admissions and legal education. Their conversation covers a range of topics, including problems with the LSAT, the recent test-optional proposal to the ABA, the impacts that the U.S. News law school rankings have had on legal education (and their thoughts on the new methodology changes), and a new pathway to law school admissions, JD-Next.

You can find basic information on JD-Next, as well as a list of schools that have been granted variances to accept JD-Next in lieu of another admissions test (LSAT or GRE), here.

Craig Boise is the Dean of Syracuse University College of Law, where he is currently completing his final year in that role, after which he will be working with colleges, universities, and law schools as a part of Spivey Consulting Group. He is a Member of the Council of the ABA Section on Legal Education, previously served on the ABA’s Standards Review Committee and the Steering Committee of the AALS’s Deans’ Forum, and served as Dean of Cleveland-Marshall College of Law. He holds a JD from the University of Chicago Law School and an LLM in Tax from NYU School of Law.

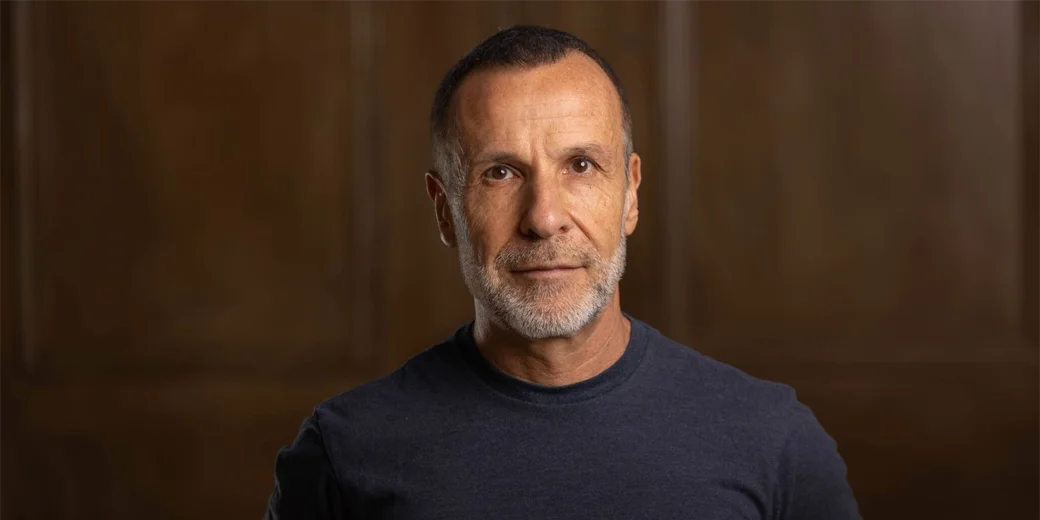

Daniel Rodriguez is a current professor and former Dean of Northwestern University Pritzker School of Law. He served as President of the Association of American Law Schools (AALS) in 2014 and served as Dean of the University of San Diego School of Law from 1998 to 2005. He holds a JD from Harvard Law School.

Mike: Welcome to Status Check with Spivey, where we talk about life, law school, law school admissions, a little bit of everything. Today's topic is going to be not just law school admissions, but possibly a good part of the future of law school admissions. What are the new structures of the application process, particularly more innovative or different forms of standardized admissions tests to enter law school? It's from a different lens, a global lens. So we have a current Dean, Craig Boise, serving more as the host, and a former Dean, Dan Rodriguez, serving more as the guest, particularly because Dan has a good knowledge of JD-Next, which is a good part of this podcast. Dan is currently a professor at Northwestern School of Law. He was formerly, from 2012 to 2018, the Dean of Northwestern Law, known as a very innovative dean, incredibly well-known and well-regarded. The same goes for Craig, who is very well-regarded in the space. Craig has been a Dean for a long time at two different law schools, currently at Syracuse Law. He's been a member of the Council of the ABA Section on Legal Education, and he previously served on the ABA Standards Review Committee and the steering committee of the AALS Deans' Forum. I could go on about their bona fides for the next 15 minutes. Let me instead give a brief overview of what they're talking about, because it's really fascinating. We don't jump straight into what JD-Next is.

If you want to know what JD-Next is, and how universal it will become in the admissions process: there are no predictions yet of how universal it will become; that is to be determined. About halfway through the podcast, Dan and Craig get very much into depth on what JD-Next is and how it differs from a typical standardized test. But the first half, which I found equally interesting, asks: all right, why does the American Bar Association require a valid and reliable standardized test? And measurement matters. I'm speaking for myself now, not Dan and Craig, although I think they echo this well. You want some form of measurement. Then the question becomes, why have we had a monopolistic test, the LSAT, as the only metric for so many years? A lot of that has to do with U.S. News & World Report. Although, as Dan and Craig get into, the LSAT's weight in the U.S. News rankings was just eviscerated. If you're a law school that's paying attention to rankings, previously you had to, and they talk about this, overweight the LSAT relative to its predictive value. And now, for the first time in a long time, you might not have to overweight the LSAT so much.

Given this opportunity, have there ever been innovative, different tests out there that might do more than just predict first-year law school success relatively well? The LSAT does a moderately good job of predicting first-year law school performance. Every school is different, but you can think of it as somewhere in the range of maybe 40 to 60 percent, I could be slightly off, in predicting your first-year law school GPA. It does not do a good job of predicting what kind of lawyer you're going to be; in fact, as Bill Henderson out of Indiana has shown, it negatively correlates in some dimensions, such as networking. It also breaks down badly when you look at its predictive value for minority and socioeconomically challenged applicants.

So given these structural problems with the LSAT, why has it been so universally accepted? Well, now here's the time. Credit to Marc Miller, who championed both the GRE as part of the admissions process and, later, JD-Next. Incidentally, as Dan and Craig note, the GRE predicts first-year law school performance almost as well as the LSAT. But the neat thing about JD-Next is that, per Dan's research and the research of the schools that have stress-tested and piloted it, not only does JD-Next predict first-year law school performance equally well, it gets rid of that bias against minority applicants, and it gets rid of the bias against socially and economically challenged applicants. And it's to be determined, but because of its structure, you might very well see it doing a much better job of testing something that matters even more than first-year grade point percentile: how you're going to do as a practicing lawyer.

It's a rather long intro to a rather deep discussion, I think a great discussion, so without further delay, let me hand it over to Craig and Dan.

Craig: Morning, this is Craig Boise. I am the Dean of the Law School at Syracuse University, and I am privileged to be able to chat this morning with Dan Rodriguez, who has a long list of accomplishments, but I know him as a former Dean at Northwestern. We've had multiple conversations about things related to legal education and have collaborated in the past on a couple of initiatives related to change in legal education. Dan, it's great to be on with you this morning and get a chance to talk to you again.

Dan: Right back at you, Craig, good to see you again, and congratulations, by the way. I know you're winding down a fabulous tenure at Syracuse, and congratulations for all you've accomplished and will still get to accomplish in the future in a different role.

Craig: Thanks Dan, I really appreciate that. I am looking forward to the first sabbatical of my 20-year academic career.

Dan: It's lovely, done it twice and it’s lovely.

Craig: Great. Well, this morning, the topic is something called JD-Next, a potential alternative to the Law School Admission Test, and we'll talk a little bit more about that. But I think, just to set the table here, going to law school is a major undertaking in and of itself, but getting into a law school in the first place is also a pretty significant undertaking. So maybe you can just describe, at a high level, what's currently required if someone wants to attend law school, in addition to, obviously, applying to a law school and being admitted? What does that look like?

Dan: Sure, happy to. Let me begin with the United States, since of course different countries have their own rules, and focus here on the USA. To be admitted to a law school accredited by the American Bar Association, which describes roughly 200 law schools (there are other law schools that are free-standing and accredited by states, but for the purpose of this discussion, with all due respect to those schools, let's leave them to one side), you need to be a graduate of an accredited college or university. There are, of course, many more than 200 of those, and usually that involves a BA or BS of some sort. And that's, I'll just say without going on a tangent, rather unique to US higher education, and it hasn't always been the case, but it's now the case. So you're expected to have graduated from an accredited college or university.

And then the ABA accreditation standards, which, I don't need to tell you, govern the regulation of law schools, require you to submit, and the magic language is, a valid and reliable admissions test for the law school to consider as a condition of your admission. Now, as we can get into, and I suspect we will, how the law school treats that admissions test is up to the school itself in what, to use the favored term, is a holistic admissions scheme. It may count for a lot, it may count for a middle amount or a little, but you have to take that test and submit it to a law school. When I say "the test," by far the most typical test is the Law School Admission Test, the LSAT, but for the last few years there's also been the option of taking the Graduate Record Exam, the GRE. And whether you take the LSAT or the GRE, whether you take one or both, or take it several times, which is not uncommon, at the end of the day what the law school has available is your undergraduate record (the fact that you graduated, your GPA, and everything like that), your admissions test score, and then of course whatever else is required, which differs from school to school. In my experience, all law schools require you to submit letters of recommendation, the contents of which may differ from school to school. Many more schools interview candidates nowadays than when you and I were applying to law school, often using technology to put a face to the name, as it were, and they then consider whatever other criteria the school believes are valuable as part of their admissions.

Craig: The bottom line, though, is that the LSAT, the Law School Admission Test, is required, and a law school must require it of any applicant to law school. So tell me, who devises and administers the Law School Admission Test?

Dan: The Law School Admission Test has for many decades been a product sold to and used by law schools, developed by an organization called the Law School Admission Council, which is a nonprofit organization located in Newtown, Pennsylvania. It has a very large staff and it's led by a very able leader whom you and I both know, Kellye Testy, who herself was a law school dean in the past. The LSAC not only administers the LSAT and gives students the opportunity to take it a number of times a year, but it also administers a very large part of the infrastructure of the admissions system that law schools typically use. We might call it the “under the hood” part of the admissions process, but it's very, very important: collecting test scores and even providing one-stop shopping for submitting transcripts and all of that. But to answer your question directly, the test is administered by the Law School Admission Council.

Craig: So this ends up being a single funnel into law school. The road leads through the Law School Admission Council.

Dan: Pretty much. I suppose it's worthwhile to put an asterisk here, again to highlight something I said a moment ago, which is that the ABA put its stamp of approval, maybe two or three years ago, on an alternative test, the Graduate Record Exam, the GRE, which has been around at least as long as the LSAT. It's the test that students applying to graduate school in areas other than law, business, or medicine will typically take. And the ABA has said that it too can be a valid and reliable admissions test.

I think quite frankly, though, the overwhelming majority of students who apply to law school are still taking the LSAT because that's just been the leading test and has been for quite a long time.

Craig: So the Law School Admission Test itself, what does it test?

Dan: I'm smiling, Craig, because, well, I wouldn't say that it's in the eye of the beholder, that would be a bit glib, but you get a lot of different answers to that question, whether you're talking to deans or folks who work in admissions or the like. So let me try to pare it down to its essential, consensus answer.

What it purports to measure is the ability of students to succeed when they begin law school. And I think the psychometrics and the data that the LSAC collects and reports to the law schools, with the benefit, of course, of data provided by the law schools, really deal with the relationship between your LSAT score and your success in the first year of law school. And so I think the data that you and I see as Deans every year, which is quite quantitative, ultimately leads to the conclusion that the LSAT does a pretty good job at predicting first-year success. Now, by their own admission, the best job is done when you combine the LSAT and undergraduate grade point average, but as between the two, the LSAT has, over a long period of time, successfully predicted law school performance, and by the way, that's also true of the GRE.

Let's be clear, though, and I'm glad you asked the question, because what it doesn't purport to measure is everything else. How successful will a student be by the time they graduate? The likelihood that they'll pass the bar exam. Certainly, the likelihood they'll go on to great fame and fortune and success in the legal profession. All of those are of course crucial criteria. Everyone wants to know if there's a magic test that illuminates that, but there isn't. And it doesn't advertise itself as a test that predicts the ability to be a good lawyer. It predicts your ability to succeed in the first year of law school, which of course is something quite important.

Craig: So let's unpack that just a bit. So the LSAT is a timed test of three hours or so. A standardized test, under pressure in a timed environment. The idea here is that this test, if completed successfully as an applicant to law school, suggests that you will be successful in taking a roughly three-hour timed, under-pressure exam in law school in the first year. So that doesn't seem to me to be terribly groundbreaking or surprising. The prediction here is fairly narrow.

Dan: Exactly right. And again, this is not by any means a backhanded criticism of the LSAT or any standardized admissions test. But there are two things to note. One is the kinds of questions that are traditionally asked. They're tweaked from time to time, and there are changes in the weighting and all of that, but they're basically tests that look at logical reasoning, reading comprehension, certain games and things like that. They're not really the sum and substance of what you're actually doing when you're in law school.

So one question that gets asked is, if you're giving a general, well, I don't think they call it a general aptitude test, that's maybe not the phrase they would use, but it is to a large extent aptitude, how does that actually jibe with the substance of what you'll learn in law school? And I think the answer, it's fair to say, is not that much. I once had someone who was an expert in psychometrics for decades say to me, “look, the nasty secret of all this is, you could take somebody's SAT score or ACT score, and it would probably be a good predictor of how you will do in the first year of law school.” If somebody's good at standardized tests, they're probably going to be good along the way. So that's a little bit cynical, but I think it suggests, just as you say, that you're basically measuring apples and oranges. The apples are what you're being asked on the LSAT or the GRE, and the oranges, as it were, are what you're actually doing when you're in the first year of law school.

Craig: And that predictive ability, just to unpack that a little bit: of course, there's a lot of math behind all of this conversation we're having. But essentially, if I'm correct, the LSAT's predictive ability for success in the first year depends on the school. The predictive ability varies from one law school to the next, and it's somewhere between 49 and 65%, I think, which is a little better than a coin toss if you think of it that way. So what we have is a required test with a marginal predictive ability, certainly the best among the tests that are out there, but there aren't that many. And that may be the issue. But it predicts success only in the first year. So that's the landscape right now with the Law School Admission Test. You mentioned that the GRE was approved. Can you talk just a little bit about the ABA Council? In full disclosure, I was a member of the ABA Council for three years. I just rolled off; my term expired in early August. From 2013 to 2018, I also served five years on the Standards Review Committee at the ABA. So I was involved in these things in that capacity, just in the interest of disclosure. But what led to the GRE suddenly entering the picture?

Dan: While we're on the subject of disclosure, Craig, let me also make a disclosure, which is that I was involved for some period of time as a strategic advisor/consultant for ETS, which is the organization that administers the GRE. So your listeners should know that as well, although I wasn't part of the process of approving it or anything like that. And I should also say the psychometrics of it are a bit above my pay grade. I can't get too far into the weeds about the math and the science of this, although I've learned a lot in my time.

Craig: It’s why we became lawyers.

Dan: Yeah, exactly, exactly, we weren't good at math. Here's the story in a nutshell. For a number of years, a number of law schools, again in the interest of disclosure, including my law school, Northwestern, had permission, I think they called it a variance, from the ABA to accept alternative admissions tests for a very narrow band of students. For example, we have a JD-MBA joint-degree program. Some of the students applying to the MBA had taken the GMAT, which is the test typically given for business schools, and some had taken the GRE. So the ABA had granted us and a handful of others permission to consider that test for students applying to law school. And I think a number of schools, along with ETS, said after a while that maybe what we're learning is that an alternative test, in this case the GRE, which is taken by tens of thousands of students, could actually have some pretty good predictive value in law school. They ran the numbers and found that it actually predicts law school performance about as well as the LSAT does. So what happened was we moved from a scheme in which individual schools were coming to the ABA, kind of hat in hand, asking for permission to use it for a small number of students, to one in which schools, in collaboration with the testing organization, asked the ABA to give more blanket approval to the use of the GRE.

The key admissions standard, as you well know, is that you have to have a valid and reliable admissions test. And we all have long assumed that means the LSAT. But the LSAT is not referred to by name in the admissions standard. So along comes a test that actually is valid and reliable by every measure. It's about as predictive as the LSAT, not more predictive, but in that range. And after some back and forth, some politicking, and some study and scrutiny, the ABA moved from what I would call a soft pass for a number of years, saying, “we're probably going to grant you a variance if you apply to use the GRE,” to, I think it was around Thanksgiving a couple of years ago, finally saying, what the heck, and giving general approval to the GRE. And they left it at those two tests. So it's not like you could submit any test. It has to be shown to be valid and reliable, and the only two tests that have historically met that criterion are the LSAT and the GRE.

Craig: Marc Miller, who's the Dean down at the University of Arizona, I think led that effort, or was certainly at the forefront of the movement to adopt the GRE. I think they were one of the first schools to announce.

Dan: The first, the first school to do that, and that really opened up the floodgates. We don't have time on this program to go into it, but I can't help but smile at what I mentioned a moment ago, the politicking. I really think that in many respects it was an uphill battle. I mean, the LSAC has long had a kind of hegemony over testing. Even though they're a nonprofit, they sell this test, and it's basically their core revenue source. So, to the surprise of no one, they fought tooth and nail to keep the ABA from approving this test. I'm both sympathetic and unsympathetic to their battles in that regard. But at the end of the day, the ABA Council, of which you've been a member, was asked to answer a straightforward question: is there any doubt that this test is valid and reliable under the standards that the ABA has for law schools? And the answer was no. There's every reason to expect it is valid and reliable.

Craig: But as you mentioned before, and it is important, there is no specific test named in the standards. It's a valid and reliable test. The LSAT has been the presumptive test for a long time. Along comes the GRE, and I can share this because it's a matter of public knowledge: there was a lot of discussion within the Council about the GRE. The introduction of the GRE as a potential alternative to the LSAT put the Council in the position of having to decide which test would be valid and reliable. The Council is not made up of psychometricians or statisticians, and I think one of the things that blew this whole area of what's required to get into law school, in terms of a test, wide open is the fact that now we have not just competing tests but competing test administration companies lobbying for their various tests.

Dan: To use an old phrase that predates both you and me: “if it ain't broke, don't fix it.” I can't speak for the Council members, but I'm sure there are a lot of folks in legal education who said, “well, it's not broken. We've used the LSAT for decades.” Schools have generally seen it as a key part of their admissions cycle. U.S. News & World Report uses these test scores in important ways in its rankings. So the question, and I hope we will get into it, is what's broken that requires a significant alternative, what part is broken that may be cause for more competition. I'm a believer that more competition is better. So if another test comes along, not the GRE or the LSAT, that also turns out to have great predictive value, I would hope and expect the Council would take that into account. The fact that it's administered by a private company, well, that's how it works.

Craig: I agree. And my position has been like yours: we want to open the field to new ideas and to thinking about these things in new ways. I was always uncomfortable with the fact that we had pretty much created a monopoly for the Law School Admission Council with this standard, particularly if we weren't open to other tests being used as well. And I think that's not the position an accreditation agency should be in. So my own view is that we should expand the field, or at least look at the GRE and other tests to determine whether they could be equally or more predictive than the LSAT of whether students will be successful in the first year. And I should just point out that one of the reasons for having that kind of test is so that law schools aren't simply admitting people who are going to fail, or who have a high likelihood of failing, in law school after having collected their tuition, leaving those students in debt to the tune of tens of thousands of dollars with no career.

So I think there is value in Standard 503, which requires a valid and reliable test, as a way of fine-tuning what's in Standard 501, which prohibits schools from admitting any student who doesn't have a reasonable likelihood of succeeding in the school's program of legal education and being admitted to the bar.

Dan: It's about people's lives, like you said, not merely numbers on a page. Those are their lives, their families' lives, and it takes an enormous investment.

Craig: Right. So let's go back to that question about what's wrong with the LSAT. We are getting to JD-Next, and we'll get to it in a moment, but I want to set this up so that people understand what's going on in the broader context. What are the problems with the LSAT?

Dan: They're intersecting, so let me try to unpack it. One point you made before in passing, but which I think we need to remind ourselves of, is this: if you actually look at the statistical reliability, and there are statistics, of course, since we've accumulated an enormous amount of data, while the LSAT and the GRE are good predictors of law school performance compared to other criteria like undergraduate GPA, when you unpack the data it turns out they're not enormously successful predictors. Like you said, they're better than a coin flip, but they're not a really, really reliable, seamless test.

So one problem is that we've never been able to design the perfect test, despite an enormous amount of effort. And, understandably, this is part of the larger conversation underway in higher education about admissions testing generally: we overweight them and all that. So one problem is just the lack of great reliability.

There's a second problem, and that is that schools overweight these admissions tests. They don't, as it were, impose a discount rate, understanding that this is just one part of the process. They say, and we say to the world, that we engage in holistic admissions, that we look at letters of recommendation, interviews, and all of that. But law schools, I think, not exempting yours or mine, are also influenced to an enormous degree, and thus so are students, by U.S. News & World Report rankings of law schools. We have no control over those rankings, certainly no external control. And historically those rankings have weighted the LSAT very heavily, and also undergraduate GPAs. Law schools, like the rest of the world, respond to incentives, and so the incentive structure has meant that we've taken a test that we know has flaws and moderate reliability at most and given it enormous attention and greater influence than is warranted.

And one other key point, since I said these are overlapping issues: the other thing we know about admissions tests generally, and this includes the LSAT and the GRE, is that the delta between the scores that students of color, students from disadvantaged backgrounds, and students from minoritized groups get on the LSAT and the GRE and how they perform in law school is much bigger than we would expect it to be. To put that in other words, there's a racial gap, as it were, that's hard to explain. I suppose our instinct would be that it's explained by a history of discrimination, lack of access to testing resources to do well, and there are a number of other explanations. It sticks out like a sore thumb, and these things collide. So we put enormous emphasis on admissions tests, greater than the evidence warrants, and we know those admissions tests reveal structural bias. I'm not going to make the point that they're internally discriminatory; I think that's a larger conversation that is worth having, but I think the jury's out on that.

But we know that there are structural problems in terms of their yield of students of color, and so we've basically created a very bad conundrum for the admissions prospects of minority students. And that's before we even get to the Supreme Court's affirmative action cases, which I'll leave aside for now, but it's a perfect storm, and it's not a storm that is helping students from disadvantaged communities. It's just not.

Craig: As a dean, you may have had this experience; I've certainly had it many times, particularly from alumni of law schools where I've served who went through law school at a different period in time, maybe one where the LSAT wasn't emphasized as much, who have said to me, “I'm lucky that I even got into law school because I had a really bad LSAT.” In my experience, a seemingly disproportionate number of the folks who tell me that are enormously successful lawyers who have gone on to incredible careers. So it always struck me that in some ways we were keeping the gate in a way that was really precluding a lot of people who could be very successful as lawyers. And part of the reason for that, I think, is that any standardized test is a snapshot at a moment of a set of things that reflect your ability to think, perhaps reflecting your background knowledge. But one of the reasons I think the LSAT is really unsatisfactory in this regard is that it doesn't allow us to figure out what a person's capacity to learn is, and specifically to learn the kinds of things you're going to deal with in law school. I mean, I remember very well taking a course to prepare for the LSAT and thinking it was really odd that I was doing problems about how many people can fit in the elevator and get off at which floors when what I wanted to do was practice law.

Dan: Much as you might hope otherwise, you're not taking a course that helps you in your first year of law school; you're taking that course to help you do well on a test that will hopefully allow you to get into law school. And when you think about it at that level, you're also, by the way, paying a pretty penny for it.

Craig: To your earlier point about the impact on minorities in law school, those tests cost money, and that creates another obstacle, not just for people of color but for anyone from a socioeconomic background where it would be challenging to front all those costs. So that always struck me as odd. And so again, Marc Miller, who is a good friend and somebody I have a tremendous amount of respect for, has been really relentless in his entrepreneurial approach. After he led the GRE push, the next thing we learned was that Marc and a few other schools that partnered with the University of Arizona, including Syracuse, again in the interest of disclosure, had another idea for addressing two things: one, better predictability of success in law school; and two, a test more aligned with what you actually do in law school, so that your preparation for the test to get into law school might itself be aligned with what you will actually do in law school. So that's the genesis, I think, of JD-Next. So what is JD-Next?

Dan: I'm going to answer that, but can I just add a preface to all this?

Craig: Sure.

Dan: Just to underscore the community of deans and others that have been involved with this. Marc, of course, can speak for himself, as can you and I, but I think the point was that a number of us were looking for something that would thread this needle. It would give an alternative pathway, just in the way we've been talking about, so that the existing test does not become an obstacle. But here's a key point: at the same time, we did not want to throw the baby out with the bathwater. We still felt, and we all do, yourself included, that it's important to have something measurable that will help in predicting one's performance. And that's important to remind ourselves of in a world in which a lot of folks have been arguing for tossing out tests and not looking at measurable criteria.

So JD-Next is two-in-one. It has a twin purpose. It is a program that students sign up for. It's online and remote (not surprising in this world we're in), runs over a period of time, I think six or seven weeks, and involves an actual, intense engagement with legal materials. It happens to be contracts, because that's really how they developed the program, but there's nothing intrinsic about contract law as the exemplar; it could be another subject. It has a combination of remote lectures, a number of assessments, and feedback from TAs and others that really give you a very strong window into contracts, certainly, but also into the experience of life as a first-year law student. So in that sense it is a pre-law program, sometimes they even call it a pipeline program, like those other institutions have developed over a long period of time to give you, again, a window into what the first year of law school is like.

At the end of the program there is an assessment, a final test, and it complies with all of the psychometric testing standards one would expect of a test that aspires to be a good predictor of how you'll do. Although, in all candor, when this program was developed, and it was developed, as you say, at the University of Arizona, they certainly had great confidence that they could, if not replicate law school, then develop a pre-law program that would be a really good window into it, with current law professors teaching the materials. What was much less certain, until they actually had some data to look at, was whether the final assessment showed any particularly strong correlation between your success in JD-Next and your success in law school. Even if the assessment had not shown a strong correlation, there would still be value in having the systematic pre-law program, and a number of law schools, I think, were willing to say, particularly since it was given away for free, "Sure, there'll be an opportunity to do that."

What the folks who administer the assessment learned, though, over three years of giving the test sequentially (at a range of law schools, by the way, which were providing data and information about it), is that it turned out to be an awfully good predictor of success in law school, as good a predictor as the LSAT and the GRE. And here's the kicker: without the discrepancies that the LSAT and the GRE had shown with respect to the performance of students from minoritized groups. So that was really something quite powerful. And it was peer-reviewed. This was not just a slogan from the JD-Next folks in a self-serving way; this was an analysis that was done, replicated, and ultimately published in peer-reviewed journals.

Craig: And I think that's what's so interesting and so important about this. As I said before, when you're looking at an applicant and trying to figure out whether they are going to be successful, if you have only the LSAT score to look at, that doesn't give you much of a window into the way they learn, the way they think, or their ability to take particular material and succeed on an outcome assessment. So I think that's one of the really important things. We tend to look at GPA as maybe giving a bit of a window there, because it measures performance over four years of undergrad.

Dan: And persistence, and you know, all those kinds of qualities.

Craig: And of course we know that varies wildly based on the major the student pursued in their undergraduate studies, whether it's psychology or something on the path to an MD. And so this gives us essentially a test drive of law school for students who are interested: a shortened but very intense exposure to law school material that measures their ability to assimilate and understand that material and then do well on a test on it, as you said. And a number of schools participated in this project and provided the data to do this study. So now we have JD-Next. What is the ABA's view of JD-Next?

Dan: So it's an interesting tale, I think, of the evolution of understanding. If Marc were here, he would tell you that he fought what he saw as the good fight when, I think a couple of years ago, he accepted on behalf of Arizona (maybe he didn't really ask first) a handful of students who had taken JD-Next, only to be told by the ABA, quite understandably by the way, "That's not how it works. We have a requirement of valid, reliable admissions tests. This could be seen as an end-run around that." Taking that lesson, what followed, I think, was a period over the next couple of years in which there was an effort to develop more data. I think the ABA (you would know more about the internal part of this) was looking to maybe commission its own studies and develop some expertise, so that the bulk of the information didn't just come from the University of Arizona and from schools that had adopted it.

And they went through a process that I would see as very methodical and ultimately came to the conclusion, just fairly recently, that the way to really learn whether JD-Next is part of an answer to the dilemma with respect to these admissions tests is to allow schools to seek permission through the variance process (and my sense is that it's been readily granted) to use JD-Next in the next admission cycle, maybe several cycles, who knows how methodical this will be, to really find out how it's working. And the ABA has a key role in this. Obviously, they are the accreditor, but they can also help shepherd and steer the development of data so that we can learn more from the schools that accept JD-Next and find out how well it is working. Is it truly an alternative pathway? And if so, they're not going to require JD-Next or anything like it, but it becomes another alternative admissions pathway. And remember, it's a test and it's also more than a test. It has an assessment, a final test that's graded, but it is also the whole pre-law program, and the two go hand in hand. So we're in that process now, where schools have requested variances; we'll see what they do.

Craig: So I want to point out, parenthetically, that one of the reasons there hasn't been an alternative to the LSAT in the past is that this valid, reliable test requirement meant you couldn't admit students on an experimental basis with some other measure. So the ability to experiment and innovate in this space was really constrained by the ABA's Standard 503, and again, hats off to Marc Miller for really pursuing this. But I think there was a lot of interest from schools that were curious about alternatives, and I think that's reflected in the number of schools that participated in validating the predictive power of this test, and also in the number that have requested variances from the ABA Council to use the JD-Next program. I believe that number is somewhere around 35 schools, and we were one of them. And the ABA Council has made it possible for schools to request a variance fairly easily, with almost a template form, as opposed to the sort of voluminous data that's often required when a school is seeking a variance from one standard or another. So it's a fairly streamlined variance option.

We took advantage of that, as did a number of other schools, and we now have permission under the variance from the ABA to begin using JD-Next immediately, as we're in this current admission cycle building classes that will begin next fall, in 2024. So does this resolve the issue, or what do you think is next?

Dan: There are three key audiences, Craig, in this regard. We've been focusing on the ABA as a key audience, and absolutely they are a necessary condition for the kind of experimentation we're talking about here. And again, hats off to the ABA and the ABA Council and leadership for really destabilizing, dare I say disrupting (we talk about disruptive innovation), what has been, there's no two ways around it, largely the hegemony of LSAC for as long as we've been in legal education. But there are two other audiences. The second audience is the law schools, and law schools are not known for being tremendous innovators, if I can paint with a broad brush. They're also considering, "What's broken in the current system? Why should we take this chance? Why should we potentially make enemies with LSAC?" and all of that. So hats off to the schools that have embarked on this, at least as an experiment.

The third audience, of course, is potential students. So JD-Next wants and needs greater publicity, and also greater presence in the marketplace, as it were, to be able to tell potential students, "Now you have pathways that weren't available to you five years ago. The GRE is another alternative test that you can take, but here's a very different pathway." And keep in mind, students might elect to take all of those pathways. Nothing in this variance process stops them from taking the LSAT and submitting an LSAT score. Indeed, some of the schools that have requested variances may continue to require these other admissions tests; nothing about the process tells schools what they must or must not do with respect to other admissions tests. But if students believe that there is a critical mass of schools that will look at alternatives and will actually take account of those alternatives, then they will get the message: "I'm going to do this."

It's a time-intensive process; it's not like they walk into a room for three hours and take a test. It's several weeks of their precious time and investment, and ultimately some amount of money, so I think it is really important to get the message out that this is an alternative pathway.

Craig: And as we've noted before, the time and effort that goes into completing that six- or seven-week course and taking the test is going to be directly relevant and useful as they begin law school.

Dan: Oh, I think that's absolutely right. Let me make a comment that, if Marc were here, he might say, "Don't say this, Dan," but I'll say it anyway. It's wonderful what the folks at Arizona have developed, and I think they're continuing to refine it. But this is also an opportunity for competition from other kinds of programs, alternative pathways that could be developed to really improve upon JD-Next, or let a thousand flowers bloom, as the saying goes. The time and intensity that went into this project, I know first-hand, has been enormous. So it's not like you can build a comparable new admissions test in an afternoon. But this will create an incentive, I think, on the part of all stakeholder groups, including LSAC, to take a close look at what they are doing in developing admissions criteria, and hopefully generate a world in which, maybe 10 or 15 years from now, there are various pathways that all lead to the same place, which is to admit excellent students, diverse students, and to really pursue excellence in our admissions processes.

Craig: I want to go back briefly and say that over the last year a lot has happened in this space. When I was on the Standards Review Committee, around 2018, some work we did culminated in a recommendation from the Standards Review Committee to the ABA Council that Standard 503 be modified, and that the requirement of a standardized test either be eliminated or the definition of what kind of test counts be changed, to encourage more innovation. That proposal was adopted by the Council. As you know, the process for adopting new standards goes through the ABA House of Delegates. When a proposal from the Council is presented to it, the House of Delegates votes, and if they vote down the proposed change to the Standards, the Council can resubmit it to the House of Delegates a second time. If it's defeated a second time, the Council can go ahead and issue and administer that standard even over the objection of the House of Delegates. So the House of Delegates doesn't have a veto. But in 2018, the proposal to eliminate that requirement was actually pulled just before a vote in the House of Delegates.

Dan: I was there, I was sitting in the House of Delegates.

Craig: There was an intense lobbying effort by interested parties aimed at shooting this thing down, which succeeded in having it pulled. So fast-forward five years, and now, as a member of the Council, I'm part of the group proposing the change that the LSAT, or that valid and reliable test, no longer be required. And again there was a major lobbying effort and a lot of opposition, and it was again pulled by the Council before a House of Delegates vote. One of the things we heard during that discussion was that we couldn't possibly eliminate the requirement. Now, first of all, the proposal would not have said you can't use the LSAT; it would have just said that you don't have to use the LSAT. And the response was, "Oh my god, we can't adopt this change, because what will we use? There's no other alternative." I think the JD-Next program offers that kind of alternative.

Dan: A hundred percent. I think recounting the history is really telling. These are difficult issues; you and I might not agree on everything, but I know in this respect we agree. There's a tension, I think. On the one hand, there is what can be seen as the heavy-handed regulatory force of the ABA. It's worth pointing out, as a number of deans have, that the ABA is unique among the professions in requiring an admissions test. That's not novel to us, because it's been the case for decades, but it's unusual in the world of regulation. On the other hand, there is the worry that law schools, maybe even incentivized by the Supreme Court's decision last summer, will say, "Oh great, now we can basically use admissions criteria that don't take into account the kind of evidence-based factors that really help sort out which students will succeed and which will not."

I do think that's not an idle worry, but I don't necessarily think the answer is to maintain a scheme of regulation that is anachronistic and, again, heavy-handed. And I agree, absolutely: when you look at a JD-Next alternative, it answers the question, "Can't law schools develop admissions mechanisms that address all of those important and vital needs?" Law schools should do that because it's in the interest of the law school and of the students, not because a regulatory authority wags its finger and says, "No, you have to do exactly this." So that's another reason to be greatly heartened by the ABA's decision to allow for a very easy system of variances.

Craig: Let me just make an observation based on my experience with the Council. I think you are right that a lot of deans view the Council as heavy-handed at times. My experience is very different. I think the Council has really smart people on it. In fact, I've enjoyed being on the Council precisely because you engage with people who are really thinking in creative and forward-leaning ways about what kind of regulation there ought to be for legal education. There are a lot of people who are very open to, and encouraging of, innovation and change, and so when something innovative like repealing the Standard 503 test requirement comes up, the Council is really being liberal there, almost deregulatory. The opposition comes from various groups whose ox is going to be gored, if you will; they are the ones who often hold up that kind of innovation. On the other hand, where you see what might be viewed as heavy-handed regulation coming out of the Council, that is often at the insistence of those same outside groups.

Dan: That's absolutely right. I've had the great good fortune to teach and write over a long career in areas of law and regulation, administrative law and the like, and the story so often told is, paradoxically, incumbents favoring regulation. How could that be? How could incumbent organizations favor regulation that seems to impact them? It's the way of American regulatory life in many respects. But I would underscore, from the perspective of somebody outside the regulatory field, exactly what you say: over the course of my professional lifetime, I've seen the ABA Council evolve over a number of years, thanks to the good, conscientious hard work of staff and Council members (who of course rotate), into a solid voice in favor of innovation. Now, you win some, you lose some. Any innovation may favor one particular group of law schools or another, so oxen are going to be gored, but the Council has both an effect on, and a real commitment to, fixing what in many respects is a broken system. I think deans do too, although there are collective action problems that sometimes stand in the way. But I'm for anything that can be done to break up the hegemony (and to be clear, this is not just about LSAC) of a very small handful of organizations that have had an outsized role in, I think, stifling innovation, including, and here's where I'm going to get myself in trouble, but what the heck, the big ABA, which is not the same as the ABA Council and Section on Legal Education. I think they need to stand down, as it were, and support efforts at innovation.

Craig: Incidentally, it's interesting that the biggest threat to the Law School Admission Test, as it turns out, is less likely to be the ABA Council than the change in the U.S. News weighting formula, which has significantly reduced the weight of the LSAT in the U.S. News rankings. Very interesting, even after a lot of effort to fend off a perceived ABA threat to the LSAT.

Dan: But of course, Craig, those can go in tandem too, right? Because, and this is an overly simplified way to look at the picture, if more schools adopt JD-Next as an alternative admissions test, at some point it becomes rather unlikely that U.S. News & World Report can persist in weighting the LSAT heavily. And that's what happened with business schools, by the way: as more business schools accepted the GRE as an alternative to the GMAT, U.S. News significantly changed its weighting of the admissions test.

Craig: Yeah, U.S. News first adapted to the GRE by creating a sort of parallel, corresponding score and continuing to use that in the weights, but you're right. I think at some point that whole thing breaks down. So the bottom line is we now have an alternative that law schools can use, called JD-Next, as part of their admissions process to help determine an applicant's likely success in law school. This may put less emphasis on the LSAT, or even the GRE, going forward: all good and interesting developments, in my view, and I think in yours. We're about out of time, so is there anything else you'd like to say about JD-Next before we go?

Dan: If anything, this is a message to law schools and law school deans, which you and I are (soon your deanship will come to an end, and mine ended a few years ago), but I know that, like me, you're strongly committed to leadership in legal education and take great pride in the extraordinary work of deans, old and new and in between. And I would say to deans: take some chances. You know what the hegemony of the LSAT has done, and you put your finger on it earlier: it has reduced the willingness of schools to take chances on the student who might actually go on to be a world leader as a lawyer, but whose admissions test or undergraduate experience or something else gives pause. If JD-Next can help accelerate schools' willingness to think outside the box and to take chances, the folks for whom law schools take chances can go out and be the next Ruth Bader Ginsburg, or the next fill-in-the-blank, and that could make an enormous difference, I think, to the well-being and welfare of access to justice and the rule of law. So if this helps in a tiny way in accelerating that kind of innovation, I think Marc and his colleagues at Arizona will have done a really great thing for legal education and for the profession.

Craig: Well said Dan, I agree completely. It's been a pleasure to talk about these issues, just a pleasure to see you again.

Dan: Likewise.

Craig: Best to you. Thanks so much for the commentary this morning.

Dan: And thanks to Mike Spivey for bringing us together.

Craig: Yes, we're very appreciative. This is an important issue and thank you Mike and Spivey Consulting Group for addressing it. Thanks so much.

In this episode of Status Check with Spivey, Dr. Guy Winch returns to the podcast for a conversation about his new book, Mind Over Grind: How to Break Free When Work Hijacks Your Life. They discuss burnout (especially for those in school or their early career), how society glorifies overworking even when it doesn’t lead to better outcomes (5:53), the difference between rumination and valuable self-analysis (11:02), the question Dr. Winch asks patients who are struggling with work-life balance that you can ask yourself (17:58), how to reduce the stress of the waiting process in admissions and the job search (24:36), and more.

Dr. Winch is a prominent psychologist, speaker, and author whose TED Talks on emotional well-being have over 35 million combined views. He co-hosts the podcast Dear Therapists with Lori Gottlieb. Dr. Winch’s new book, Mind Over Grind: How to Break Free When Work Hijacks Your Life, is out today!

Our last episode with Dr. Winch, “Dr. Guy Winch on Handling Rejection (& Waiting) in Admissions,” is here.

You can listen and subscribe to Status Check with Spivey on Apple Podcasts, Spotify, and YouTube. You can read a full transcript of this episode with timestamps below.

In this episode of Status Check with Spivey, Mike interviews General David Petraeus, former Director of the Central Intelligence Agency and a four-star general in the United States Army. He is currently a Partner at KKR, Chairman of the KKR Global Institute, and Chairman of KKR Middle East. Prior to joining KKR, General Petraeus served for over 37 years in the U.S. military, culminating in command of U.S. Central Command and command of coalition forces in Afghanistan. Following retirement from the military and after Senate confirmation by a vote of 94-0, he served as Director of the CIA during a period of significant achievements in the global war on terror. General Petraeus graduated with distinction from the U.S. Military Academy and also earned a Ph.D. in international relations and economics from Princeton University.

General Petraeus is currently the Kissinger Fellow at Yale University’s Jackson School. Over the past 20 years, General Petraeus was named one of America’s 25 Best Leaders by U.S. News and World Report, a runner-up for Time magazine’s Person of the Year, the Daily Telegraph Man of the Year, twice a Time 100 selectee, Princeton University’s Madison Medalist, and one of Foreign Policy magazine’s top 100 public intellectuals in three different years. He has also been decorated by 14 foreign countries, and he is believed to be the only person who, while in uniform, threw out the first pitch of a World Series game and did the coin toss for a Super Bowl.

Our discussion centers on leadership at the highest level, early-career leadership, and how to get ahead and succeed in your career. General Petraeus developed four task constructs of leadership based on his vast experience at the highest levels, which can be viewed at Harvard's Belfer Center here. He also references several books on both history and leadership, including:

We talk about how to stand out early in your career in multiple ways, including letters of recommendation and school choice. We end on what truly matters: finding purpose in what you do.

General Petraeus gave us over an hour of his time from his incredibly busy schedule and shared leadership experiences that are truly unique. I hope all of our listeners, so many of whom will become leaders in their careers, have a chance to listen.

-Mike Spivey

You can listen and subscribe to Status Check with Spivey on Apple Podcasts, Spotify, and YouTube. You can read a full transcript with timestamps below.

In this episode of Status Check with Spivey, Anna has an in-depth discussion on law school admissions interviews with two Spivey consultants—Sam Parker, who joined Spivey this past fall from her position as Associate Director of Admissions at Harvard Law School, where she personally interviewed over a thousand applicants; and Paula Gluzman, who, in addition to her experience as Assistant Director of Admissions & Financial Aid at both UCLA Law and the University of Washington Law, has assisted hundreds of law school applicants and students in preparing for interviews as a consultant and law school career services professional. You can learn more about Sam here and Paula here.

Paula, Sam, and Anna talk about how important interviews are in the admissions process (9:45), different types of law school interviews (14:15), advice for group interviews (17:05), what qualities applicants should be trying to showcase in interviews (20:01), categories of interview questions and examples of real law school admissions interview questions (26:01), the trickiest law school admissions interview questions (33:41), a formula for answering questions about failures and mistakes (38:14), a step-by-step process for how to prepare for interviews (46:07), common interview mistakes (55:42), advice for attire and presentation (especially for remote interviews) (1:02:20), good and bad questions to ask at the end of an interview (1:06:16), the funniest things we’ve seen applicants do in interviews (1:10:15), what percentage of applicants we’ve found typically do well in interviews (1:10:45), and more.

Links to Status Check episodes mentioned:

You can listen and subscribe to Status Check with Spivey on Apple Podcasts, Spotify, and YouTube. You can read a full transcript of this episode with timestamps below.